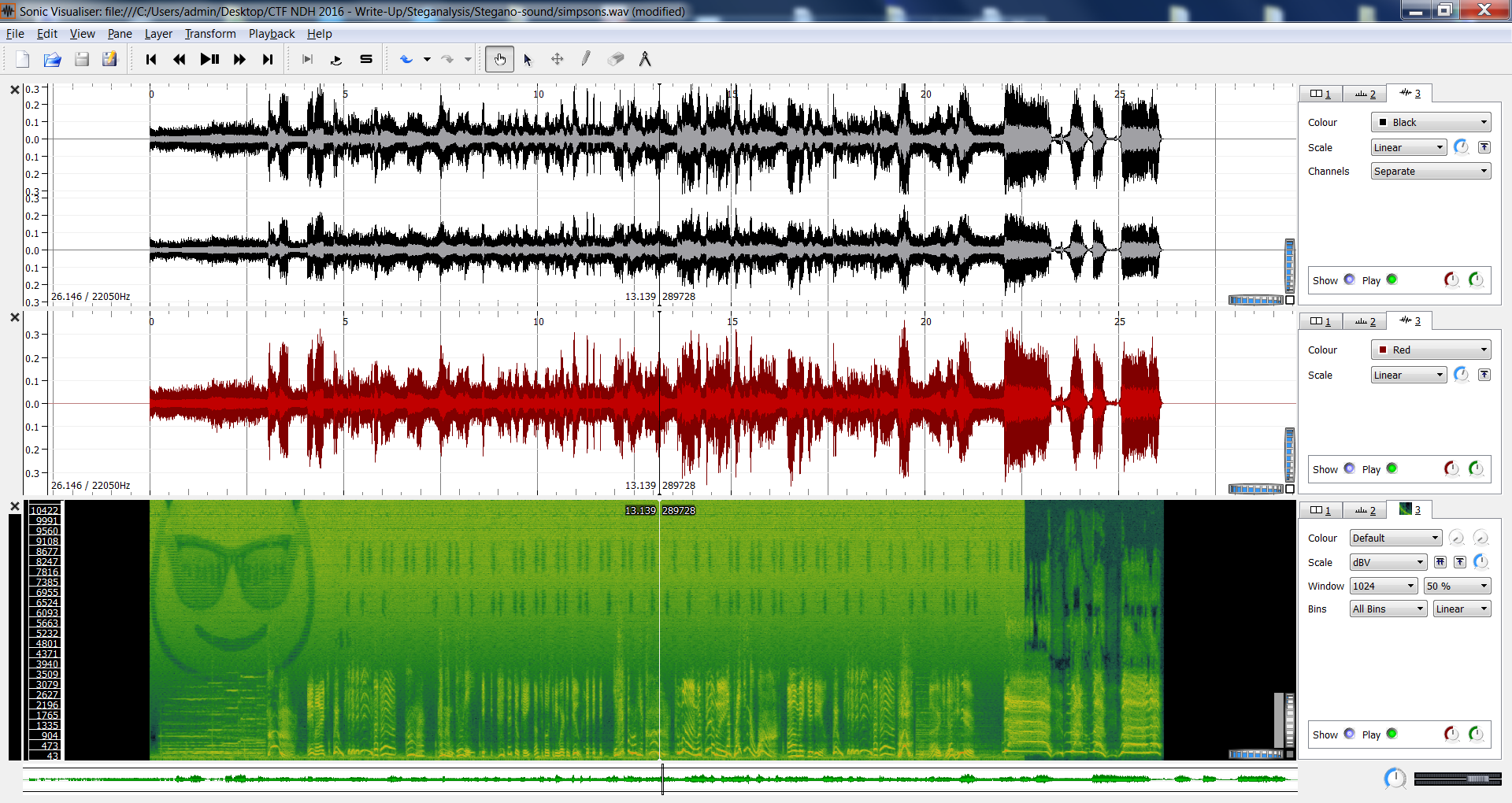

This leads to a change of calculation to `s_mag = np.abs(values) * 2 / data_length`.

I have not found a way to export their spectrum, but I have manually read off the first few values (note: not the dB values) into a list, `theirvalues`. With the two changes I have mentioned, the graphs are still not an exact match, but they are much closer. I suspect there may still be some smoothing of some kind (there are references to hops in the code, but I can't quite suss out what they're doing).

As you noted, your two results differ by a constant factor of approximately 2. From Wikipedia's entry on Decibel (my emphasis):

> Two different scales are used when expressing a ratio in decibels, depending on the nature of the quantities: power and field (root-power). When expressing a power ratio, the number of decibels is ten times its logarithm to base 10. That is, a change in power by a factor of 10 corresponds to a 10 dB change in level. When expressing field (root-power) quantities, a change in amplitude by a factor of 10 corresponds to a 20 dB change in level. The decibel scales differ by a factor of two so that the related power and field levels change by the same number of decibels with linear loads.

If you change the scalar to 20, you get the image above.
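
The factor of two in the quoted definition is easy to check numerically. This is a minimal sketch using only the standard library; the amplitude ratio of 0.01 is an arbitrary example value, not something read from either spectrum. It shows that both definitions agree when each is applied to the matching quantity, while feeding an amplitude into the power formula halves the dB value.

```python
import math

# Arbitrary example value (not read from either spectrum): an amplitude
# 100 times below full scale.
amplitude_ratio = 0.01
# For a linear load, power is proportional to the square of the amplitude.
power_ratio = amplitude_ratio ** 2

field_db = 20 * math.log10(amplitude_ratio)  # field (root-power) definition
power_db = 10 * math.log10(power_ratio)      # power-ratio definition
mixed_db = 10 * math.log10(amplitude_ratio)  # power formula applied to an amplitude

print(f"field: {field_db:.1f} dB, power: {power_db:.1f} dB, mixed: {mixed_db:.1f} dB")
```

The readings in the question (-40 dB vs -65 dB) are not in an exact 2:1 ratio, so the choice of scale factor alone probably does not explain the whole gap; the window normalisation discussed in the answer also shifts the level.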


    plt.semilogx(x_labels, s_dbfs, alpha=0.4, color='tab:blue', label='Spectrum')
    plt.semilogx(x_labels, flat_data, color='tab:blue', label='Spectrum (with filter)')
    target_name = audio_file.parent / (audio_file.stem + '.png')

The script converts the 32-bit float audio file into a dBFS spectrum diagram, using the first 4096 samples as the window, as Sonic Visualiser does.

Where is the problem with my script? Why do I get a different result?

The first big difference is that Sonic Visualiser uses the "power ratio" definition of the decibel, from this Wikipedia page. I have also verified this in the v4.0.1 source code (in `svcore/base/AudioLevel.cpp`, line 54):

    double dB = 10 * log10(multiplier);

They also appear simply to divide by the size of the window when calculating the magnitude.
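
To put a number on the window-size point, the sketch below compares the two divisors for a 4096-sample window. It assumes a Hann window (`np.hanning`); the source only shows a variable called `weighting`, so the window type is an assumption, and the size of the shift depends on it.

```python
import numpy as np

window_size = 4096  # first 4096 samples, as in the question
# Assumption: a Hann window; the source does not say which window `weighting` is.
weighting = np.hanning(window_size)

window_sum = float(np.sum(weighting))  # divisor used in the question's script
ratio = window_size / window_sum       # how much larger the data_length divisor is

# Dividing by data_length instead of the window sum scales every magnitude by 1/ratio,
# which moves the whole curve down by a constant number of decibels.
shift_field_db = 20 * np.log10(ratio)  # on a 20*log10 (field) scale
shift_power_db = 10 * np.log10(ratio)  # on a 10*log10 (power-ratio) scale

print(f"sum(window) = {window_sum:.1f}, ratio = {ratio:.4f}")
print(f"shift: {shift_field_db:.2f} dB (20*log10), {shift_power_db:.2f} dB (10*log10)")
```

For a Hann window the sum is roughly half the window length, so changing the divisor amounts to about 6 dB on the field scale and about 3 dB on the power scale. That is a constant offset in dB, whereas switching between `10 * log10` and `20 * log10` scales every dB value by a factor of two.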

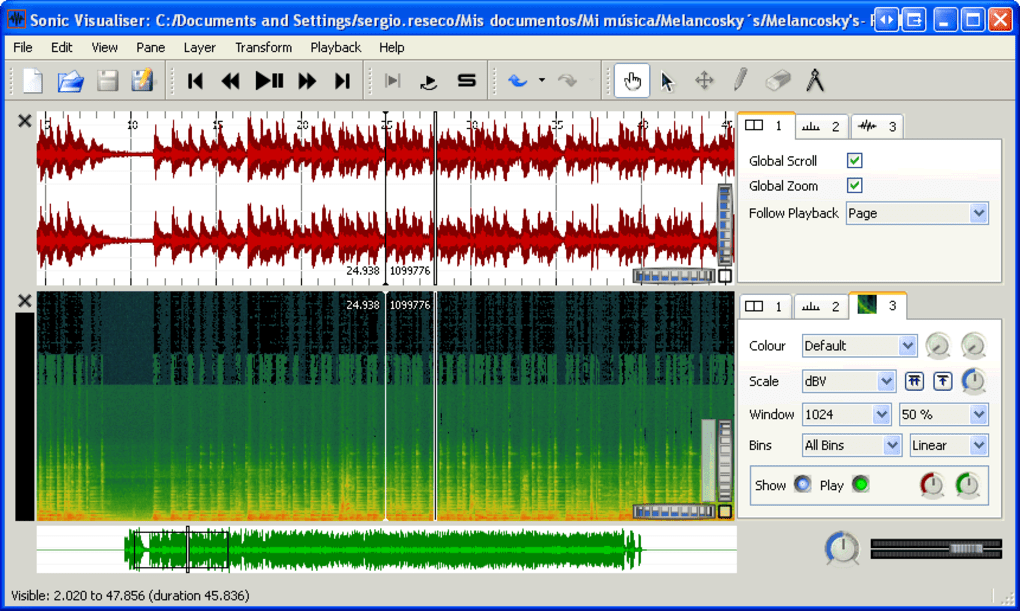

Before I started, I analyzed the file using Sonic Visualiser to get a reference spectrum. Then I tried to reproduce this result with my Python script, but got a different result.

Everything looks right except the scale of the dB values: at 100 Hz, Sonic Visualiser is at -40 dB and my script is at -65 dB. So I assume there is a problem in the way my script converts the FFT result to dBFS. If I match the curve from Sonic Visualiser to my script's output, it is obvious that the level conversion lacks some factor.

A minimal version of my script, using the `demo.wav` file from this question, looks like this:

    from pathlib import Path
    # ...
    frequencies = np.fft.rfftfreq(data_length, d=1.
    # ...
    s_mag = np.abs(values) * 2 / np.sum(weighting)
    # ...
    frequency, data = wavfile.read(str(audio_file))
    # ...
    x_labels, s_dbfs = db_fft(data, frequency)
    flat_data = savgol_filter(s_dbfs, 601, 3)
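
The script excerpt shows only parts of `db_fft`. Below is a self-contained sketch of what such a function might look like; the Hann window, the `sample_rate` parameter name, the `20 * log10` conversion and the small floor inside `log10` are assumptions for illustration, not the question's confirmed code. A synthetic sine placed exactly on an FFT bin stands in for `demo.wav`, so the sketch runs without the audio file.

```python
import numpy as np


def db_fft(data, sample_rate):
    """Single-sided magnitude spectrum in dBFS (sketch; window choice is assumed)."""
    data_length = len(data)
    # Assumption: a Hann window; the original only shows a variable named `weighting`.
    weighting = np.hanning(data_length)
    values = np.fft.rfft(data * weighting)
    frequencies = np.fft.rfftfreq(data_length, d=1.0 / sample_rate)
    # Normalise by the window's coherent gain so a full-scale sine reads about 0 dBFS.
    s_mag = np.abs(values) * 2 / np.sum(weighting)
    # Field (root-power) definition; a tiny floor avoids log10(0) for silent bins.
    s_dbfs = 20 * np.log10(np.maximum(s_mag, 1e-12))
    return frequencies, s_dbfs


# Usage: a full-scale 32-bit float sine on bin 100, standing in for demo.wav.
sample_rate = 44100
window_size = 4096  # first 4096 samples, as in the question
bin_index = 100
tone_hz = bin_index * sample_rate / window_size
t = np.arange(window_size) / sample_rate
tone = np.sin(2 * np.pi * tone_hz * t).astype(np.float32)

x_labels, s_dbfs = db_fft(tone, sample_rate)
peak = int(np.argmax(s_dbfs))
print(f"peak at {x_labels[peak]:.1f} Hz, {s_dbfs[peak]:.2f} dBFS")
```

Dividing by `np.sum(weighting)` is what makes the full-scale test tone read close to 0 dBFS here. Swapping in `data_length` and `10 * np.log10`, as the answer describes for Sonic Visualiser, would shift and compress the curve accordingly.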

I am working on a script that creates a spectrum analysis of an audio file using SciPy and NumPy.


It seems I have an issue in the implementation of a function that creates a frequency spectrum from an audio file. I am asking this question in the hope that someone will find the problem. You can download the 32-bit float WAV audio file here.
